Note

Click here to download the full example code

Variational classifier¶

Author: Maria Schuld — Posted: 11 October 2019. Last updated: 19 January 2021.

In this tutorial, we show how to use PennyLane to implement variational quantum classifiers - quantum circuits that can be trained from labelled data to classify new data samples. The architecture is inspired by Farhi and Neven (2018) as well as Schuld et al. (2018).

We will first show that the variational quantum classifier can reproduce the parity function

This optimization example demonstrates how to encode binary inputs into the initial state of the variational circuit, which is simply a computational basis state.

We then show how to encode real vectors as amplitude vectors (amplitude encoding) and train the model to recognize the first two classes of flowers in the Iris dataset.

1. Fitting the parity function¶

Imports¶

As before, we import PennyLane, the PennyLane-provided version of NumPy, and an optimizer.

import pennylane as qml

from pennylane import numpy as np

from pennylane.optimize import NesterovMomentumOptimizer

Quantum and classical nodes¶

We create a quantum device with four “wires” (or qubits).

dev = qml.device("default.qubit", wires=4)

Variational classifiers usually define a “layer” or “block”, which is an elementary circuit architecture that gets repeated to build the variational circuit.

Our circuit layer consists of an arbitrary rotation on every qubit, as well as CNOTs that entangle each qubit with its neighbour.

We also need a way to encode data inputs \(x\) into the circuit, so that the measured output depends on the inputs. In this first example, the inputs are bitstrings, which we encode into the state of the qubits. The quantum state \(\psi\) after state preparation is a computational basis state that has 1s where \(x\) has 1s, for example

We use the BasisState function provided by PennyLane, which expects

x to be a list of zeros and ones, i.e. [0,1,0,1].

def statepreparation(x):

qml.BasisState(x, wires=[0, 1, 2, 3])

Now we define the quantum node as a state preparation routine, followed

by a repetition of the layer structure. Borrowing from machine learning,

we call the parameters weights.

@qml.qnode(dev, interface="autograd")

def circuit(weights, x):

statepreparation(x)

for W in weights:

layer(W)

return qml.expval(qml.PauliZ(0))

Different from previous examples, the quantum node takes the data as a

keyword argument x (with the default value None). Keyword

arguments of a quantum node are considered as fixed when calculating a

gradient; they are never trained.

If we want to add a “classical” bias parameter, the variational quantum classifier also needs some post-processing. We define the final model by a classical node that uses the first variable, and feeds the remainder into the quantum node. Before this, we reshape the list of remaining variables for easy use in the quantum node.

Cost¶

In supervised learning, the cost function is usually the sum of a loss function and a regularizer. We use the standard square loss that measures the distance between target labels and model predictions.

def square_loss(labels, predictions):

loss = 0

for l, p in zip(labels, predictions):

loss = loss + (l - p) ** 2

loss = loss / len(labels)

return loss

To monitor how many inputs the current classifier predicted correctly, we also define the accuracy given target labels and model predictions.

def accuracy(labels, predictions):

loss = 0

for l, p in zip(labels, predictions):

if abs(l - p) < 1e-5:

loss = loss + 1

loss = loss / len(labels)

return loss

For learning tasks, the cost depends on the data - here the features and labels considered in the iteration of the optimization routine.

Optimization¶

Let’s now load and preprocess some data.

Note

The parity dataset can be downloaded

here and

should be placed in the subfolder variational_classifier/data.

data = np.loadtxt("variational_classifier/data/parity.txt")

X = np.array(data[:, :-1], requires_grad=False)

Y = np.array(data[:, -1], requires_grad=False)

Y = Y * 2 - np.ones(len(Y)) # shift label from {0, 1} to {-1, 1}

for i in range(5):

print("X = {}, Y = {: d}".format(X[i], int(Y[i])))

print("...")

Out:

X = [0. 0. 0. 0.], Y = -1

X = [0. 0. 0. 1.], Y = 1

X = [0. 0. 1. 0.], Y = 1

X = [0. 0. 1. 1.], Y = -1

X = [0. 1. 0. 0.], Y = 1

...

We initialize the variables randomly (but fix a seed for reproducibility). The first variable in the list is used as a bias, while the rest is fed into the gates of the variational circuit.

np.random.seed(0)

num_qubits = 4

num_layers = 2

weights_init = 0.01 * np.random.randn(num_layers, num_qubits, 3, requires_grad=True)

bias_init = np.array(0.0, requires_grad=True)

print(weights_init, bias_init)

Out:

[[[ 0.01764052 0.00400157 0.00978738]

[ 0.02240893 0.01867558 -0.00977278]

[ 0.00950088 -0.00151357 -0.00103219]

[ 0.00410599 0.00144044 0.01454274]]

[[ 0.00761038 0.00121675 0.00443863]

[ 0.00333674 0.01494079 -0.00205158]

[ 0.00313068 -0.00854096 -0.0255299 ]

[ 0.00653619 0.00864436 -0.00742165]]] 0.0

Next we create an optimizer and choose a batch size…

opt = NesterovMomentumOptimizer(0.5)

batch_size = 5

…and train the optimizer. We track the accuracy - the share of correctly classified data samples. For this we compute the outputs of the variational classifier and turn them into predictions in \(\{-1,1\}\) by taking the sign of the output.

weights = weights_init

bias = bias_init

for it in range(25):

# Update the weights by one optimizer step

batch_index = np.random.randint(0, len(X), (batch_size,))

X_batch = X[batch_index]

Y_batch = Y[batch_index]

weights, bias, _, _ = opt.step(cost, weights, bias, X_batch, Y_batch)

# Compute accuracy

predictions = [np.sign(variational_classifier(weights, bias, x)) for x in X]

acc = accuracy(Y, predictions)

print(

"Iter: {:5d} | Cost: {:0.7f} | Accuracy: {:0.7f} ".format(

it + 1, cost(weights, bias, X, Y), acc

)

)

Out:

Iter: 1 | Cost: 3.4355534 | Accuracy: 0.5000000

Iter: 2 | Cost: 1.9717733 | Accuracy: 0.5000000

Iter: 3 | Cost: 1.8182812 | Accuracy: 0.5000000

Iter: 4 | Cost: 1.5042404 | Accuracy: 0.5000000

Iter: 5 | Cost: 1.1477739 | Accuracy: 0.5000000

Iter: 6 | Cost: 1.2734990 | Accuracy: 0.6250000

Iter: 7 | Cost: 0.8290628 | Accuracy: 0.5000000

Iter: 8 | Cost: 0.3226183 | Accuracy: 1.0000000

Iter: 9 | Cost: 0.1436206 | Accuracy: 1.0000000

Iter: 10 | Cost: 0.2982810 | Accuracy: 1.0000000

Iter: 11 | Cost: 0.3064355 | Accuracy: 1.0000000

Iter: 12 | Cost: 0.1682335 | Accuracy: 1.0000000

Iter: 13 | Cost: 0.0892512 | Accuracy: 1.0000000

Iter: 14 | Cost: 0.0381562 | Accuracy: 1.0000000

Iter: 15 | Cost: 0.0170359 | Accuracy: 1.0000000

Iter: 16 | Cost: 0.0109353 | Accuracy: 1.0000000

Iter: 17 | Cost: 0.0108388 | Accuracy: 1.0000000

Iter: 18 | Cost: 0.0139196 | Accuracy: 1.0000000

Iter: 19 | Cost: 0.0123980 | Accuracy: 1.0000000

Iter: 20 | Cost: 0.0085416 | Accuracy: 1.0000000

Iter: 21 | Cost: 0.0053549 | Accuracy: 1.0000000

Iter: 22 | Cost: 0.0065759 | Accuracy: 1.0000000

Iter: 23 | Cost: 0.0024883 | Accuracy: 1.0000000

Iter: 24 | Cost: 0.0029102 | Accuracy: 1.0000000

Iter: 25 | Cost: 0.0023471 | Accuracy: 1.0000000

2. Iris classification¶

Quantum and classical nodes¶

To encode real-valued vectors into the amplitudes of a quantum state, we use a 2-qubit simulator.

dev = qml.device("default.qubit", wires=2)

State preparation is not as simple as when we represent a bitstring with a basis state. Every input x has to be translated into a set of angles which can get fed into a small routine for state preparation. To simplify things a bit, we will work with data from the positive subspace, so that we can ignore signs (which would require another cascade of rotations around the z axis).

The circuit is coded according to the scheme in Möttönen, et al. (2004), or—as presented for positive vectors only—in Schuld and Petruccione (2018). We had to also decompose controlled Y-axis rotations into more basic circuits following Nielsen and Chuang (2010).

def get_angles(x):

beta0 = 2 * np.arcsin(np.sqrt(x[1] ** 2) / np.sqrt(x[0] ** 2 + x[1] ** 2 + 1e-12))

beta1 = 2 * np.arcsin(np.sqrt(x[3] ** 2) / np.sqrt(x[2] ** 2 + x[3] ** 2 + 1e-12))

beta2 = 2 * np.arcsin(

np.sqrt(x[2] ** 2 + x[3] ** 2)

/ np.sqrt(x[0] ** 2 + x[1] ** 2 + x[2] ** 2 + x[3] ** 2)

)

return np.array([beta2, -beta1 / 2, beta1 / 2, -beta0 / 2, beta0 / 2])

def statepreparation(a):

qml.RY(a[0], wires=0)

qml.CNOT(wires=[0, 1])

qml.RY(a[1], wires=1)

qml.CNOT(wires=[0, 1])

qml.RY(a[2], wires=1)

qml.PauliX(wires=0)

qml.CNOT(wires=[0, 1])

qml.RY(a[3], wires=1)

qml.CNOT(wires=[0, 1])

qml.RY(a[4], wires=1)

qml.PauliX(wires=0)

Let’s test if this routine actually works.

x = np.array([0.53896774, 0.79503606, 0.27826503, 0.0], requires_grad=False)

ang = get_angles(x)

@qml.qnode(dev, interface="autograd")

def test(angles):

statepreparation(angles)

return qml.expval(qml.PauliZ(0))

test(ang)

print("x : ", x)

print("angles : ", ang)

print("amplitude vector: ", np.real(dev.state))

Out:

x : [0.53896774 0.79503606 0.27826503 0. ]

angles : [ 0.56397465 -0. 0. -0.97504604 0.97504604]

amplitude vector: [ 0.53896774 0.79503606 0.27826503 -0. ]

Note that the default.qubit simulator provides a shortcut to

statepreparation with the command

qml.QubitStateVector(x, wires=[0, 1]). However, some devices may not

support an arbitrary state-preparation routine.

Since we are working with only 2 qubits now, we need to update the layer function as well.

The variational classifier model and its cost remain essentially the same, but we have to reload them with the new state preparation and layer functions.

@qml.qnode(dev, interface="autograd")

def circuit(weights, angles):

statepreparation(angles)

for W in weights:

layer(W)

return qml.expval(qml.PauliZ(0))

def variational_classifier(weights, bias, angles):

return circuit(weights, angles) + bias

def cost(weights, bias, features, labels):

predictions = [variational_classifier(weights, bias, f) for f in features]

return square_loss(labels, predictions)

Data¶

We then load the Iris data set. There is a bit of preprocessing to do in

order to encode the inputs into the amplitudes of a quantum state. In

the last preprocessing step, we translate the inputs x to rotation

angles using the get_angles function we defined above.

Note

The Iris dataset can be downloaded

here and should be placed

in the subfolder variational_classifer/data.

data = np.loadtxt("variational_classifier/data/iris_classes1and2_scaled.txt")

X = data[:, 0:2]

print("First X sample (original) :", X[0])

# pad the vectors to size 2^2 with constant values

padding = 0.3 * np.ones((len(X), 1))

X_pad = np.c_[np.c_[X, padding], np.zeros((len(X), 1))]

print("First X sample (padded) :", X_pad[0])

# normalize each input

normalization = np.sqrt(np.sum(X_pad ** 2, -1))

X_norm = (X_pad.T / normalization).T

print("First X sample (normalized):", X_norm[0])

# angles for state preparation are new features

features = np.array([get_angles(x) for x in X_norm], requires_grad=False)

print("First features sample :", features[0])

Y = data[:, -1]

Out:

First X sample (original) : [0.4 0.75]

First X sample (padded) : [0.4 0.75 0.3 0. ]

First X sample (normalized): [0.44376016 0.83205029 0.33282012 0. ]

First features sample : [ 0.67858523 -0. 0. -1.080839 1.080839 ]

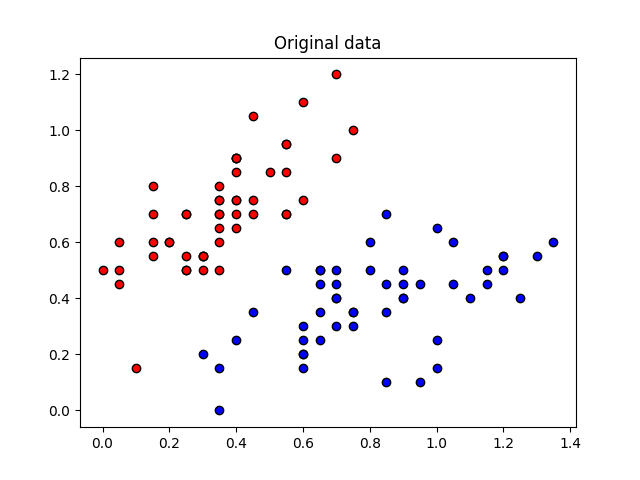

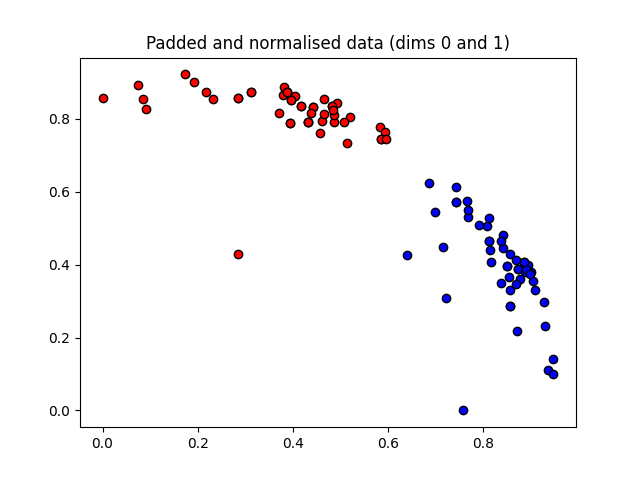

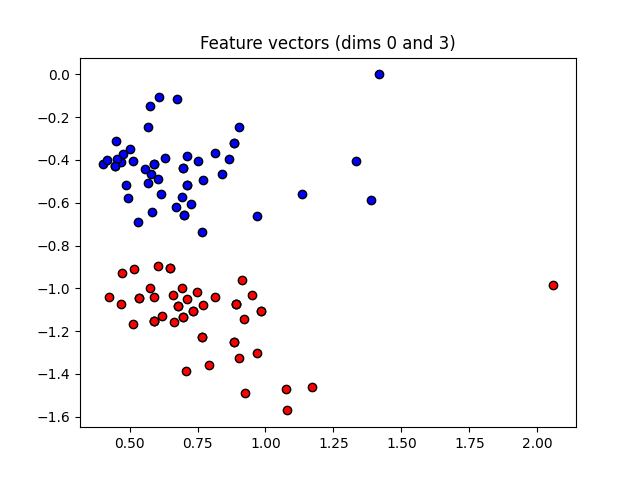

These angles are our new features, which is why we have renamed X to “features” above. Let’s plot the stages of preprocessing and play around with the dimensions (dim1, dim2). Some of them still separate the classes well, while others are less informative.

Note: To run the following code you need the matplotlib library.

import matplotlib.pyplot as plt

plt.figure()

plt.scatter(X[:, 0][Y == 1], X[:, 1][Y == 1], c="b", marker="o", edgecolors="k")

plt.scatter(X[:, 0][Y == -1], X[:, 1][Y == -1], c="r", marker="o", edgecolors="k")

plt.title("Original data")

plt.show()

plt.figure()

dim1 = 0

dim2 = 1

plt.scatter(

X_norm[:, dim1][Y == 1], X_norm[:, dim2][Y == 1], c="b", marker="o", edgecolors="k"

)

plt.scatter(

X_norm[:, dim1][Y == -1], X_norm[:, dim2][Y == -1], c="r", marker="o", edgecolors="k"

)

plt.title("Padded and normalised data (dims {} and {})".format(dim1, dim2))

plt.show()

plt.figure()

dim1 = 0

dim2 = 3

plt.scatter(

features[:, dim1][Y == 1], features[:, dim2][Y == 1], c="b", marker="o", edgecolors="k"

)

plt.scatter(

features[:, dim1][Y == -1], features[:, dim2][Y == -1], c="r", marker="o", edgecolors="k"

)

plt.title("Feature vectors (dims {} and {})".format(dim1, dim2))

plt.show()

This time we want to generalize from the data samples. To monitor the generalization performance, the data is split into training and validation set.

np.random.seed(0)

num_data = len(Y)

num_train = int(0.75 * num_data)

index = np.random.permutation(range(num_data))

feats_train = features[index[:num_train]]

Y_train = Y[index[:num_train]]

feats_val = features[index[num_train:]]

Y_val = Y[index[num_train:]]

# We need these later for plotting

X_train = X[index[:num_train]]

X_val = X[index[num_train:]]

Optimization¶

First we initialize the variables.

num_qubits = 2

num_layers = 6

weights_init = 0.01 * np.random.randn(num_layers, num_qubits, 3, requires_grad=True)

bias_init = np.array(0.0, requires_grad=True)

Again we optimize the cost. This may take a little patience.

opt = NesterovMomentumOptimizer(0.01)

batch_size = 5

# train the variational classifier

weights = weights_init

bias = bias_init

for it in range(60):

# Update the weights by one optimizer step

batch_index = np.random.randint(0, num_train, (batch_size,))

feats_train_batch = feats_train[batch_index]

Y_train_batch = Y_train[batch_index]

weights, bias, _, _ = opt.step(cost, weights, bias, feats_train_batch, Y_train_batch)

# Compute predictions on train and validation set

predictions_train = [np.sign(variational_classifier(weights, bias, f)) for f in feats_train]

predictions_val = [np.sign(variational_classifier(weights, bias, f)) for f in feats_val]

# Compute accuracy on train and validation set

acc_train = accuracy(Y_train, predictions_train)

acc_val = accuracy(Y_val, predictions_val)

print(

"Iter: {:5d} | Cost: {:0.7f} | Acc train: {:0.7f} | Acc validation: {:0.7f} "

"".format(it + 1, cost(weights, bias, features, Y), acc_train, acc_val)

)

Out:

Iter: 1 | Cost: 1.4490948 | Acc train: 0.4933333 | Acc validation: 0.5600000

Iter: 2 | Cost: 1.3312057 | Acc train: 0.4933333 | Acc validation: 0.5600000

Iter: 3 | Cost: 1.1589332 | Acc train: 0.4533333 | Acc validation: 0.5600000

Iter: 4 | Cost: 0.9806934 | Acc train: 0.4800000 | Acc validation: 0.5600000

Iter: 5 | Cost: 0.8865623 | Acc train: 0.6133333 | Acc validation: 0.7600000

Iter: 6 | Cost: 0.8580769 | Acc train: 0.6933333 | Acc validation: 0.7600000

Iter: 7 | Cost: 0.8473132 | Acc train: 0.7200000 | Acc validation: 0.6800000

Iter: 8 | Cost: 0.8177533 | Acc train: 0.7333333 | Acc validation: 0.6800000

Iter: 9 | Cost: 0.8001100 | Acc train: 0.7466667 | Acc validation: 0.6800000

Iter: 10 | Cost: 0.7681053 | Acc train: 0.8000000 | Acc validation: 0.7600000

Iter: 11 | Cost: 0.7440015 | Acc train: 0.8133333 | Acc validation: 0.9600000

Iter: 12 | Cost: 0.7583777 | Acc train: 0.6800000 | Acc validation: 0.7600000

Iter: 13 | Cost: 0.7896372 | Acc train: 0.6533333 | Acc validation: 0.7200000

Iter: 14 | Cost: 0.8397790 | Acc train: 0.6133333 | Acc validation: 0.6400000

Iter: 15 | Cost: 0.8632423 | Acc train: 0.5733333 | Acc validation: 0.6000000

Iter: 16 | Cost: 0.8693517 | Acc train: 0.5733333 | Acc validation: 0.6000000

Iter: 17 | Cost: 0.8350625 | Acc train: 0.6266667 | Acc validation: 0.6400000

Iter: 18 | Cost: 0.7966558 | Acc train: 0.6266667 | Acc validation: 0.6400000

Iter: 19 | Cost: 0.7563381 | Acc train: 0.6800000 | Acc validation: 0.7600000

Iter: 20 | Cost: 0.7315459 | Acc train: 0.7733333 | Acc validation: 0.8000000

Iter: 21 | Cost: 0.7182359 | Acc train: 0.8800000 | Acc validation: 0.9600000

Iter: 22 | Cost: 0.7339132 | Acc train: 0.8133333 | Acc validation: 0.7200000

Iter: 23 | Cost: 0.7498571 | Acc train: 0.6533333 | Acc validation: 0.6400000

Iter: 24 | Cost: 0.7593763 | Acc train: 0.6000000 | Acc validation: 0.6400000

Iter: 25 | Cost: 0.7423832 | Acc train: 0.6800000 | Acc validation: 0.6400000

Iter: 26 | Cost: 0.7361186 | Acc train: 0.7333333 | Acc validation: 0.6800000

Iter: 27 | Cost: 0.7300201 | Acc train: 0.7600000 | Acc validation: 0.6800000

Iter: 28 | Cost: 0.7360923 | Acc train: 0.6666667 | Acc validation: 0.6400000

Iter: 29 | Cost: 0.7371325 | Acc train: 0.6533333 | Acc validation: 0.6400000

Iter: 30 | Cost: 0.7234520 | Acc train: 0.7466667 | Acc validation: 0.6800000

Iter: 31 | Cost: 0.7112089 | Acc train: 0.8133333 | Acc validation: 0.7600000

Iter: 32 | Cost: 0.6923495 | Acc train: 0.9200000 | Acc validation: 0.9200000

Iter: 33 | Cost: 0.6792510 | Acc train: 0.9200000 | Acc validation: 1.0000000

Iter: 34 | Cost: 0.6756795 | Acc train: 0.9200000 | Acc validation: 0.9600000

Iter: 35 | Cost: 0.6787274 | Acc train: 0.8933333 | Acc validation: 0.8000000

Iter: 36 | Cost: 0.6938181 | Acc train: 0.7466667 | Acc validation: 0.6800000

Iter: 37 | Cost: 0.6765225 | Acc train: 0.8266667 | Acc validation: 0.8000000

Iter: 38 | Cost: 0.6708057 | Acc train: 0.8266667 | Acc validation: 0.8000000

Iter: 39 | Cost: 0.6740248 | Acc train: 0.7600000 | Acc validation: 0.7200000

Iter: 40 | Cost: 0.6835014 | Acc train: 0.6666667 | Acc validation: 0.6400000

Iter: 41 | Cost: 0.6686811 | Acc train: 0.7333333 | Acc validation: 0.7200000

Iter: 42 | Cost: 0.6217025 | Acc train: 0.8933333 | Acc validation: 0.8400000

Iter: 43 | Cost: 0.6004307 | Acc train: 0.9066667 | Acc validation: 0.9200000

Iter: 44 | Cost: 0.5910768 | Acc train: 0.9066667 | Acc validation: 0.9200000

Iter: 45 | Cost: 0.5694770 | Acc train: 0.9200000 | Acc validation: 0.9600000

Iter: 46 | Cost: 0.5588385 | Acc train: 0.9200000 | Acc validation: 0.9600000

Iter: 47 | Cost: 0.5381473 | Acc train: 0.9466667 | Acc validation: 1.0000000

Iter: 48 | Cost: 0.5205658 | Acc train: 0.9466667 | Acc validation: 1.0000000

Iter: 49 | Cost: 0.5064983 | Acc train: 0.9466667 | Acc validation: 1.0000000

Iter: 50 | Cost: 0.4969733 | Acc train: 0.9466667 | Acc validation: 1.0000000

Iter: 51 | Cost: 0.4782257 | Acc train: 0.9466667 | Acc validation: 1.0000000

Iter: 52 | Cost: 0.4536953 | Acc train: 0.9600000 | Acc validation: 1.0000000

Iter: 53 | Cost: 0.4350300 | Acc train: 1.0000000 | Acc validation: 1.0000000

Iter: 54 | Cost: 0.4272909 | Acc train: 0.9733333 | Acc validation: 0.9600000

Iter: 55 | Cost: 0.4288929 | Acc train: 0.9466667 | Acc validation: 0.9200000

Iter: 56 | Cost: 0.4261037 | Acc train: 0.9333333 | Acc validation: 0.9200000

Iter: 57 | Cost: 0.4082663 | Acc train: 0.9466667 | Acc validation: 0.9200000

Iter: 58 | Cost: 0.3698736 | Acc train: 0.9600000 | Acc validation: 0.9200000

Iter: 59 | Cost: 0.3420686 | Acc train: 1.0000000 | Acc validation: 1.0000000

Iter: 60 | Cost: 0.3253480 | Acc train: 1.0000000 | Acc validation: 1.0000000

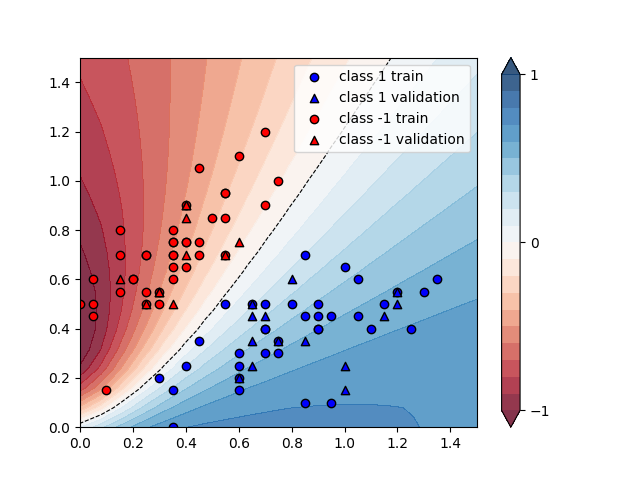

We can plot the continuous output of the variational classifier for the first two dimensions of the Iris data set.

plt.figure()

cm = plt.cm.RdBu

# make data for decision regions

xx, yy = np.meshgrid(np.linspace(0.0, 1.5, 20), np.linspace(0.0, 1.5, 20))

X_grid = [np.array([x, y]) for x, y in zip(xx.flatten(), yy.flatten())]

# preprocess grid points like data inputs above

padding = 0.3 * np.ones((len(X_grid), 1))

X_grid = np.c_[np.c_[X_grid, padding], np.zeros((len(X_grid), 1))] # pad each input

normalization = np.sqrt(np.sum(X_grid ** 2, -1))

X_grid = (X_grid.T / normalization).T # normalize each input

features_grid = np.array(

[get_angles(x) for x in X_grid]

) # angles for state preparation are new features

predictions_grid = [variational_classifier(weights, bias, f) for f in features_grid]

Z = np.reshape(predictions_grid, xx.shape)

# plot decision regions

cnt = plt.contourf(

xx, yy, Z, levels=np.arange(-1, 1.1, 0.1), cmap=cm, alpha=0.8, extend="both"

)

plt.contour(

xx, yy, Z, levels=[0.0], colors=("black",), linestyles=("--",), linewidths=(0.8,)

)

plt.colorbar(cnt, ticks=[-1, 0, 1])

# plot data

plt.scatter(

X_train[:, 0][Y_train == 1],

X_train[:, 1][Y_train == 1],

c="b",

marker="o",

edgecolors="k",

label="class 1 train",

)

plt.scatter(

X_val[:, 0][Y_val == 1],

X_val[:, 1][Y_val == 1],

c="b",

marker="^",

edgecolors="k",

label="class 1 validation",

)

plt.scatter(

X_train[:, 0][Y_train == -1],

X_train[:, 1][Y_train == -1],

c="r",

marker="o",

edgecolors="k",

label="class -1 train",

)

plt.scatter(

X_val[:, 0][Y_val == -1],

X_val[:, 1][Y_val == -1],

c="r",

marker="^",

edgecolors="k",

label="class -1 validation",

)

plt.legend()

plt.show()